Add websites to extract

First, let's set up your knowledge base. Each knowledge base contains a group of websites you want to extract data from.

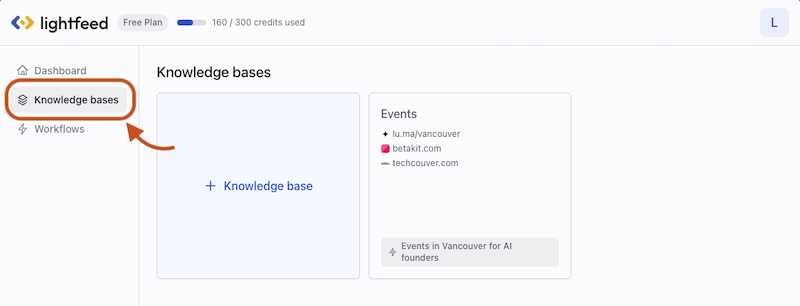

Step 1 - Add a new knowledge base

Click "Knowledge bases" in the left panel of Lightfeed.

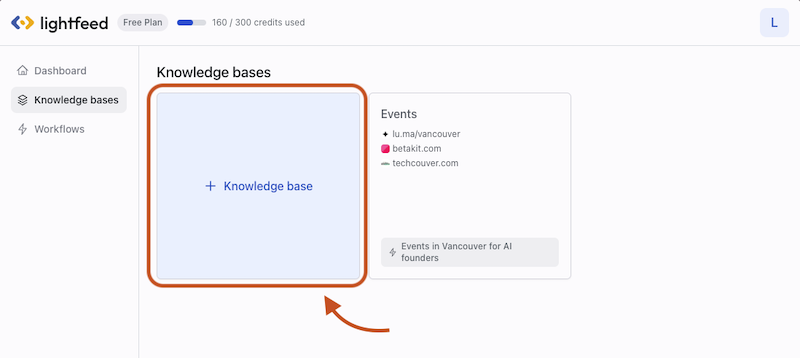

Click "+ Knowledge base".

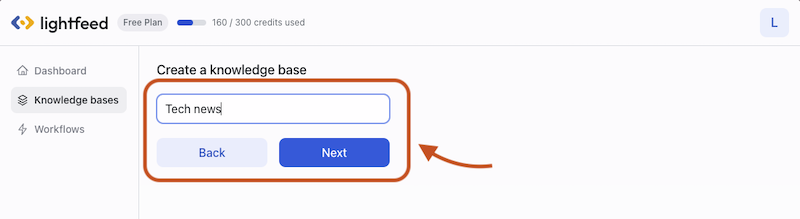

Give your knowledge base a name, then click "Next".

Step 2 - Add a website to extract

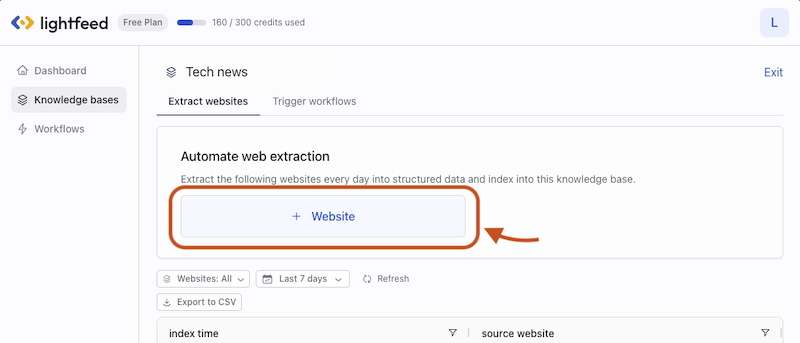

Click the "+ Website" button to add a website you want to extract data from to this knowledge base.

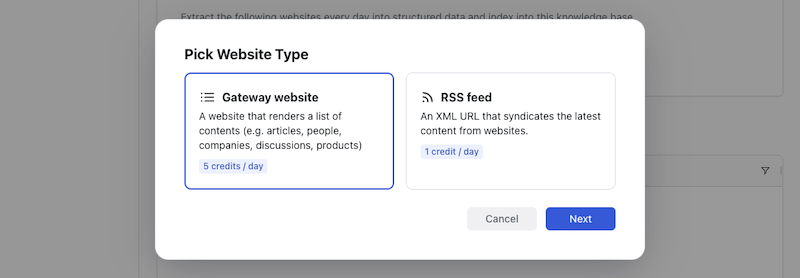

Choose a website type: "Gateway website" or "RSS feed". Choose "Gateway website" if you are extracting a list of items (e.g. articles, posts, discussions, products) from this website.

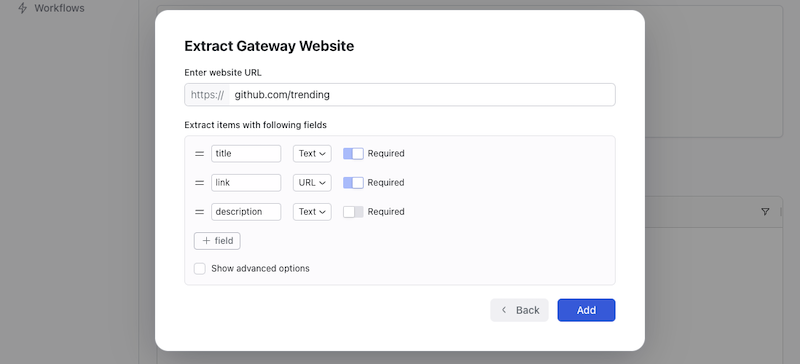

Step 3 - Input URL and define data schema

Enter the website URL, then define the data schema (the fields and their formats) you want to extract into. By default, we extract title (text), link (URL), and description (text, optional).

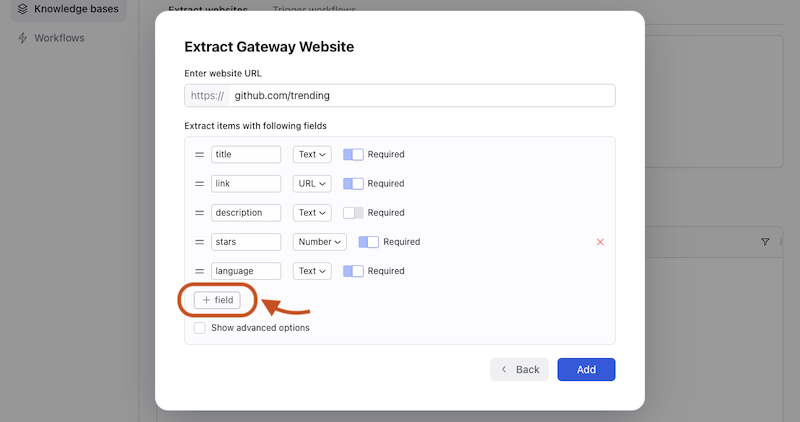

You can add more fields to extract; depending on the website, these might be price, upvotes, author, comment link, etc. In this example, from github.com/trending, I want to extract the number of stars and the programming language of each project, so I added "stars" and "language" by clicking "+ field".

When all fields are added, click "Add".
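To make the schema idea concrete, here is a minimal Python sketch that writes the example schema down as plain data and checks whether an extracted record fits it. This is not Lightfeed's API: the `FieldSpec` class, the `validate` helper, and the type names "text", "url", and "number" are hypothetical, and treating "stars" as a number and both new fields as required are assumptions.

```python
from dataclasses import dataclass

# Hypothetical local model of the schema from this step; NOT a Lightfeed API.
# Type names ("text", "url", "number") are assumptions for illustration.


@dataclass
class FieldSpec:
    name: str
    type: str
    optional: bool = False


# Default fields: title (text), link (URL), description (text, optional).
DEFAULT_FIELDS = [
    FieldSpec("title", "text"),
    FieldSpec("link", "url"),
    FieldSpec("description", "text", optional=True),
]

# Extra fields added for github.com/trending (types and required-ness assumed).
TRENDING_FIELDS = DEFAULT_FIELDS + [
    FieldSpec("stars", "number"),
    FieldSpec("language", "text"),
]


def validate(record: dict, fields: list[FieldSpec]) -> list[str]:
    """Return a list of problems; an empty list means the record fits the schema."""
    problems = []
    for f in fields:
        value = record.get(f.name)
        if value is None:
            # Missing values are only a problem for required fields.
            if not f.optional:
                problems.append(f"missing required field: {f.name}")
            continue
        if f.type == "number" and not isinstance(value, int):
            problems.append(f"{f.name} should be a number")
        if f.type == "url" and not str(value).startswith(("http://", "https://")):
            problems.append(f"{f.name} should be a URL")
    return problems
```

For instance, a made-up record such as `{"title": "example/repo", "link": "https://github.com/example/repo", "stars": 1200, "language": "Python"}` passes `validate(..., TRENDING_FIELDS)` with no problems, while a record without "stars" is reported as missing a required field.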

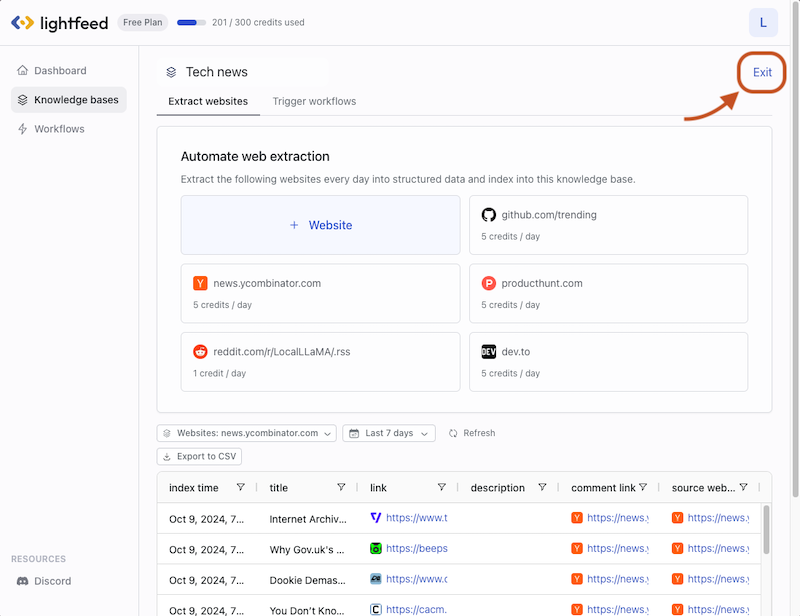

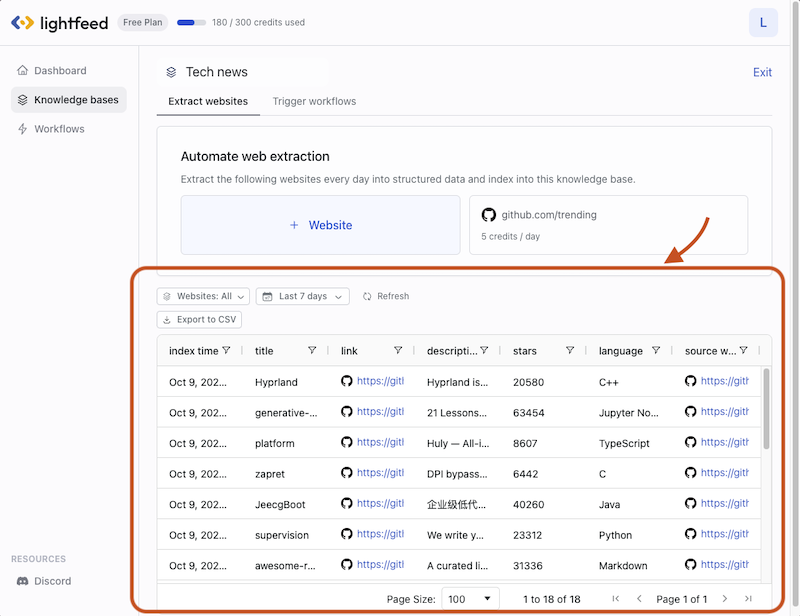

Step 4 - Extract websites and index into knowledge base

Lightfeed will scrape the website live, extract the results into the schema you defined, and index them into your knowledge base. This takes about a minute.

Once a website is added, Lightfeed extracts it every day, automatically deduplicates the results, and indexes new content to keep your knowledge base up to date and consistent.
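This guide doesn't describe how deduplication works internally. As a rough illustration only, the Python sketch below assumes each item is identified by its link and that only items whose link has not been seen before get indexed; the `new_items` and `normalize_link` helpers and the normalization rule are hypothetical, not part of Lightfeed.

```python
from typing import Iterable


def normalize_link(link: str) -> str:
    # Assumed normalization: trim surrounding whitespace and a trailing slash.
    return link.strip().rstrip("/")


def new_items(daily_items: Iterable[dict], seen_links: set[str]) -> list[dict]:
    """Return items whose link has not been indexed yet, and mark them as seen."""
    fresh = []
    for item in daily_items:
        key = normalize_link(item["link"])
        if key in seen_links:
            continue  # duplicate of an earlier run or of an earlier item in this batch
        seen_links.add(key)
        fresh.append(item)
    return fresh


if __name__ == "__main__":
    seen: set[str] = set()
    day1 = [{"link": "https://github.com/a/x"}, {"link": "https://github.com/b/y"}]
    day2 = [{"link": "https://github.com/a/x/"}, {"link": "https://github.com/c/z"}]
    print(len(new_items(day1, seen)))  # 2
    print(len(new_items(day2, seen)))  # 1 (a/x was already indexed on day 1)
```

Keying on the link is a simple choice for list-style pages like github.com/trending, where the same project can reappear day after day; a real system may use other signals.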

You can then review the results that were indexed into the knowledge base.

Step 5 - Repeat steps 2-4 to add more websites

Repeat steps 2-4 to extract more websites. When you're done, click "Exit" in the top-right corner.